Know content.

Be content.

We help you deliver rich and satisfying content experiences to maximize digital marketing ROI.


What You Get

Get all the content marketing firepower you’ll ever need.

We turn your content calendars into long-lasting and immersive reading journeys.


We’re into some serious number crunching

- 456,962,032 articles

- 181,677,652 influencers

- 466,904,619 Facebook posts

Benefits

With Social Animal

You don’t have to be an expert to answer top-dollar content marketing questions.

Where do I even start?

So many keywords and topics to analyze.

How do I engage them?

Audiences can be unpredictable and difficult to reach.

Why can’t I?

My competitors are killing it with content.

What do I do?

Niche content curation is cumbersome and complicated.

Who do I pick to maximize reach?

Influencers are a dime a dozen, and picking the right one is hard.

FEATURES

Accelerate your content marketing with AI- and ML-led insights

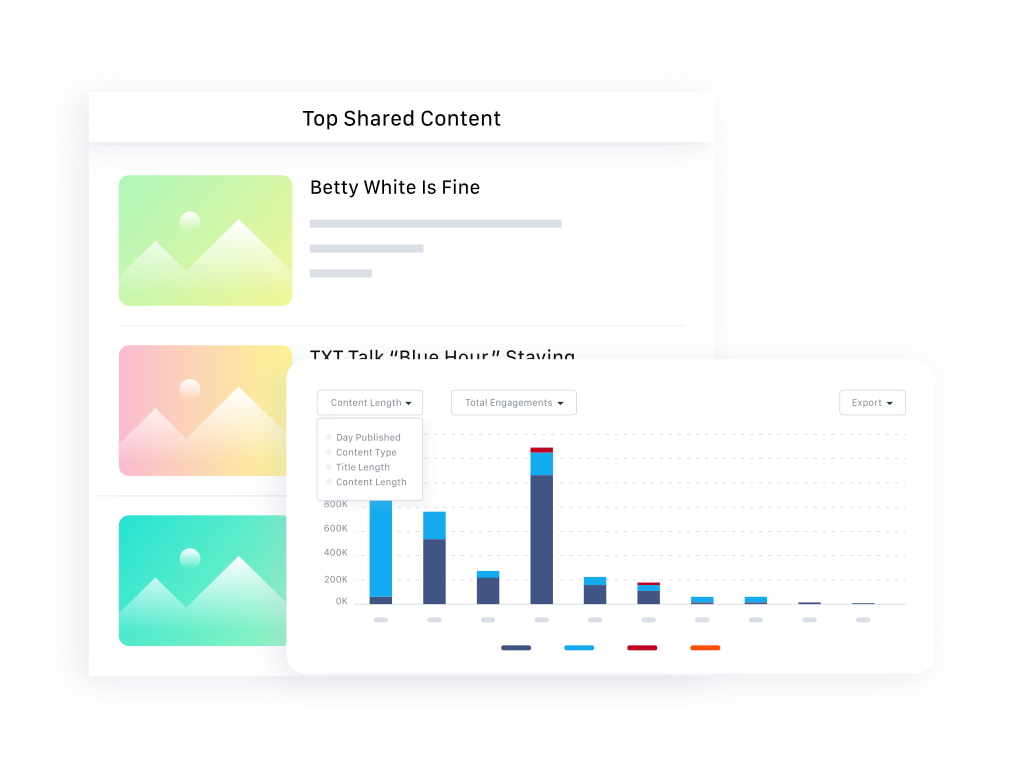

Get a full content and social analysis in 10 seconds.

- Check historical metrics on keyword and website performance.

- Analyze the length, style, and sentiment of every article.

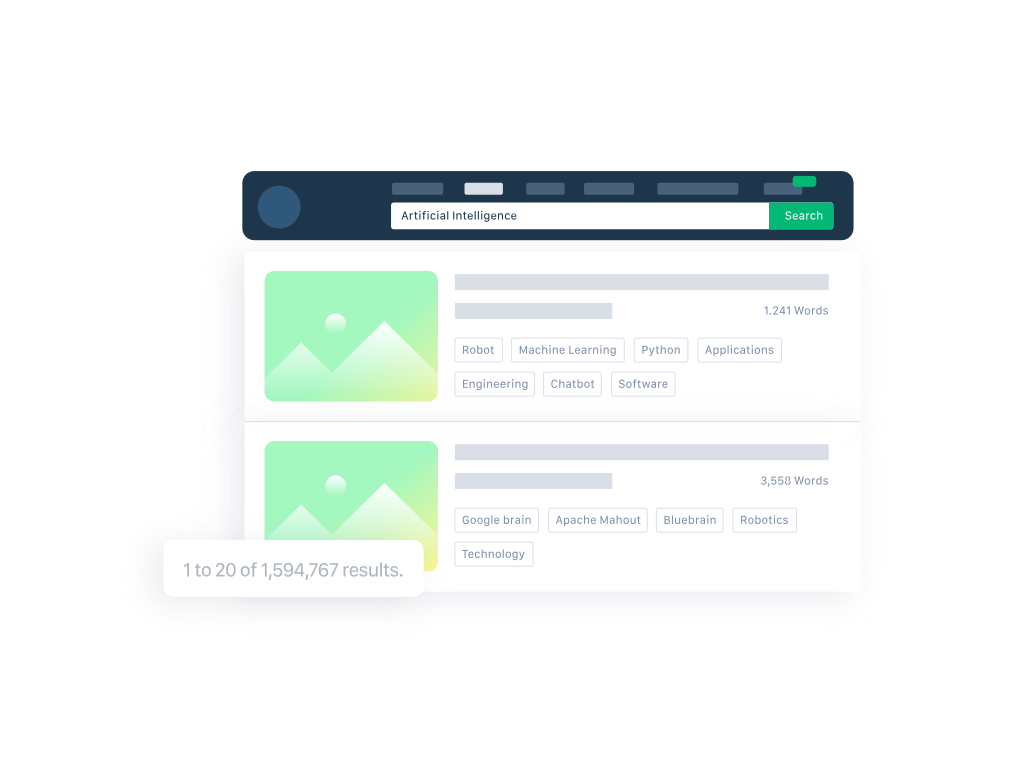

Explore millions of relevant articles for any keyword or topic.

- Go beyond headlines with ‘Deep Search’ to unearth buried keywords.

- See what’s trending in real time.

Access over 3 million profiles updated in real time.

- Get an instant preview of profiles that share content like yours.

- Use filters to fine-tune searches and avoid generic recommendations.

Crush it on Facebook with on-demand content analytics.

- Analyze more than 100K Facebook pages and decode your competitors’ best-performing strategies.

- Go sky-high with Social Animal’s Facebook insights.

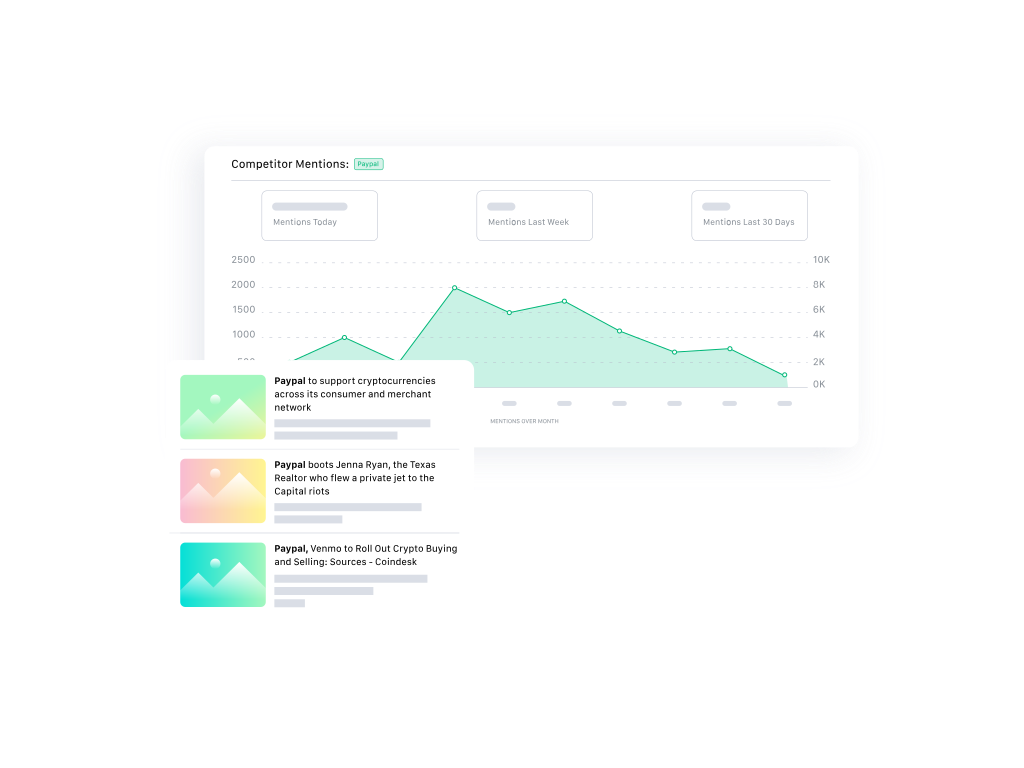

Understand how your competitors create and distribute content.

- Analyze competitors’ top-performing articles and social strategies.

- Enable alerts and receive daily emails about competitor mentions.
